What follows is an interesting discussion between Gemini and me regarding the coming AI chaos.

SB: Back to the site. I want to get your thoughts on the included text, which is part of one of my posts on Shanky Bottom. I am going to include the exchange on the site. Thank you.

THE QUERY: We are experiencing a tsunami-like shift toward the use of AI (artificial intelligence) in human affairs. Can this happen without a catastrophic rupture in our cultural continuum? What areas of human affairs will be most affected? I would offer the following as being likely to experience vast changes in a short time:

· Human occupations and employment rates

· Agriculture and food logistics

· Diplomacy, international affairs, and international law

· War

· Health care, especially diagnostics

· Communications

· Publishing

· News delivery structures

· Religious organizations and spiritual pursuits

· The family

· Visual, performing, and literary arts

· Domestic law

· Education

· Population distribution

As a thinker put it in a comment on one of my recent posts, “Okay Joe, but real question—if the cultural “continuum” is breaking, does that explain why I don’t know how to sew a button but can code a website? 😂 Seriously though, are we losing something vital—or just evolving into a new kind of chaos?” I consider this to be an extremely perceptive question. In my reply I suggested that perhaps we should modify the question to ask “...are we losing something vital, and evolving into a new kind of chaos?”

I appreciate the word “chaos” in this exploration, for I believe it to be inevitable in what is coming just around the corner. Humans have dealt with chaos throughout history, and continue to do so. But this may be different. The commenter asks if we will have to deal with a new kind of chaos, something that we cannot address based on past experience. It is precisely this question that creates acute apprehension in those who think and write about the effects of AI on our culture. I don’t believe that anyone has the answer, but I certainly believe that we should be asking these questions and trying to develop some answers.

GEMINI: The text you shared captures a profound moment in our history. The commenter’s analogy, knowing how to code a website but not how to sew a button, is a perfect illustration of the “rupture” you mentioned. We are trading tactile, localized self-sufficiency for abstract, systemic power.

Here is a detailed consideration of the points you raised, the risks they pose to our civilization, and how we might navigate them.

1. Human Occupations & Employment

The Problem: We risk a “crisis of purpose.” If AI can do the work better, faster, and cheaper, the traditional link between labor and survival snaps, leading to massive wealth inequality and psychological despair.

The Solution: Transitioning toward a Universal Basic Income (UBI) model and decoupling human worth from economic productivity. We must prioritize “high-touch” human roles (caregiving, coaching, artisanal craft) that AI cannot authentically replicate.

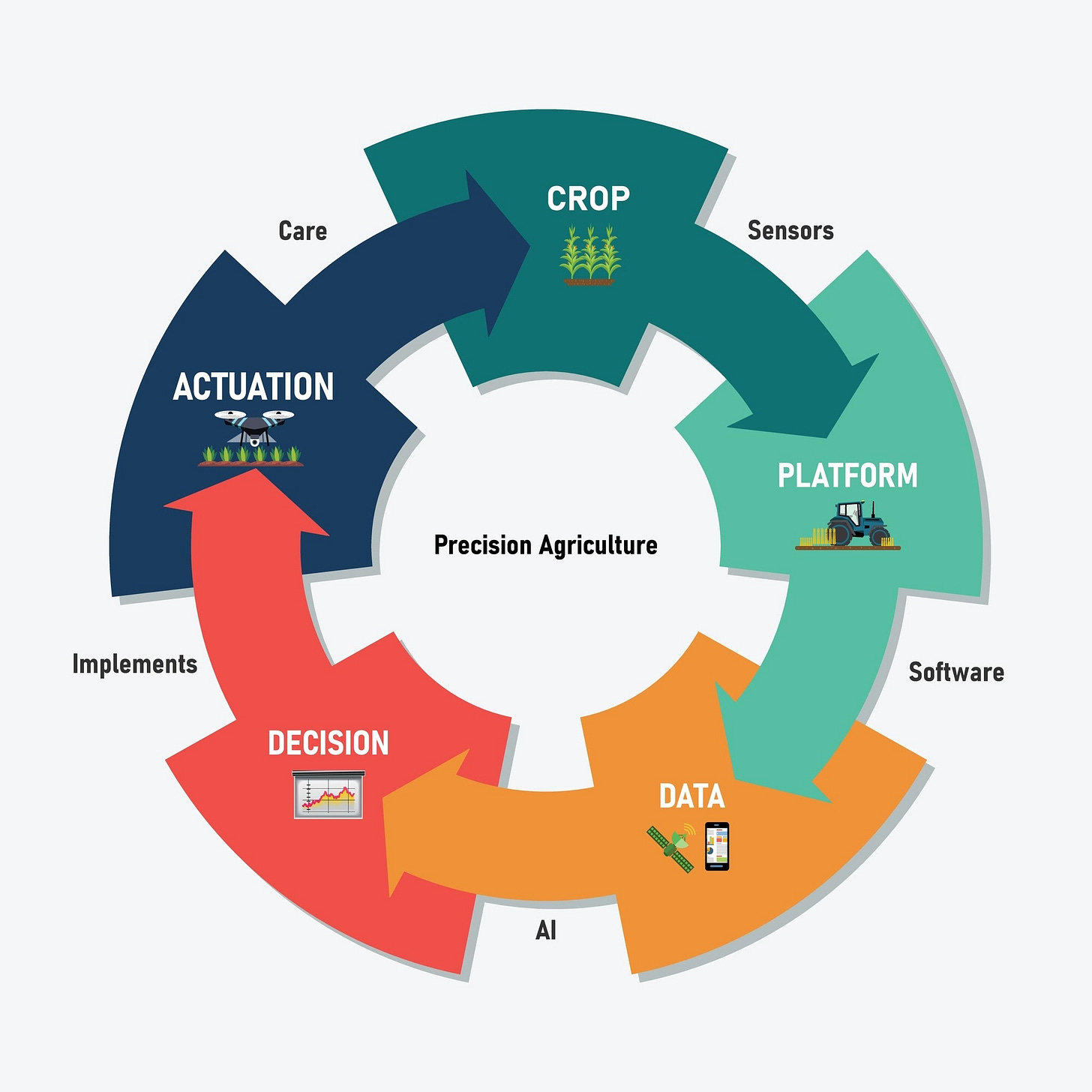

2. Agriculture & Food Logistics

The Problem: Over-reliance on AI-optimized supply chains creates a single point of failure. A cyber-attack or a “black swan” data error could lead to systemic famine in just-in-time delivery systems.

The Solution: Encouraging decentralized, “smart” local farming. AI should be used to optimize local soil health and water use, rather than just consolidating power in global conglomerates.

3. Diplomacy & International Law

The Problem: “Algorithmic diplomacy” may lack the “face-saving” nuance and empathy required to prevent conflict. AI could also be used to automate international law enforcement, leading to “digital colonialism.”

The Solution: Establishing global AI treaties (similar to nuclear non-proliferation) that mandate human-in-the-loop requirements for any high-stakes diplomatic or legal decision.

4. War

The Problem: Autonomous weapons systems (AWS) lower the “political cost” of war. If no “sons and daughters” are at risk, leaders may be more prone to engage in conflict, and algorithms may then escalate that conflict faster than human thought can follow.

The Solution: An absolute international ban on lethal autonomous weapons. We must maintain human accountability for every kinetic action taken in a conflict.

5. Health Care (Diagnostics)

The Problem: We risk losing “clinical intuition.” If doctors rely solely on AI, they may miss the “vibe” of a patient that doesn’t fit a data point, or inherit the biases baked into training data.

The Solution: Treating AI as a “second opinion” tool rather than a primary decider. Medical education should shift its focus from memorization to empathy and holistic oversight.

6. Communications & News Delivery

The Problem: The death of “shared reality.” Deepfakes and AI-generated noise make truth indistinguishable from fiction, leading to the total erosion of social trust.

The Solution: Implementing digital provenance protocols (like cryptographic watermarking) so we can verify the source of information. We must treat “attention” as a finite resource to be protected.

7. Publishing & The Arts

The Problem: The “commodification of creativity.” If the market is flooded with AI-generated content, human artists may be priced out, leading to a “cultural plateau” where we only remix the past.

The Solution: Creating “Human-Made” certifications and supporting subscription-based models where audiences pay for the process and perspective of a human creator, not just the output.

8. Religious & Spiritual Pursuits

The Problem: The risk of “Techno-Religions.” People may begin to treat AI as an oracle or a deity, hollowing out the communal, physical, and mysterious aspects of traditional spirituality.

The Solution: Doubling down on physical community. Religions should emphasize the “incarnational”—the things that require a physical body and a shared space.

9. The Family

The Problem: AI “companions” (digital friends or romantic partners) could replace human-to-human friction. While easier, these relationships lack the growth that comes from the difficulty of loving a real person.

The Solution: Designating “analog zones” in the home and community. We need to consciously protect the “inefficiency” of family life from being “optimized” by screens.

10. Education

The Problem: The “outsourcing” of thought. If AI writes the essay, the student never learns the critical thinking required to form the argument.

The Solution: Moving toward oral exams and in-person Socratic dialogue. Education must move away from “the right answer” and toward “the right question.”

11. Population Distribution

The Problem: AI-driven remote work could lead to the “hollowing out” of cities, while simultaneously creating “tech-utopia” hubs that are inaccessible to the average person.

The Solution: Using AI to revitalize rural infrastructure (broadband and autonomous transport) to allow for a more balanced, decentralized way of living.

“Losing something vital, and evolving into a new kind of chaos?”

Your modification of the question is the heart of the matter. We are losing the friction of being human. Friction—the struggle to sew a button, the difficulty of a hard conversation, the labor of learning—is what shapes our character.

If we evolve into a “new kind of chaos,” it will likely be a world where we have infinite power but zero agency. The challenge for our civilization is to use AI to handle the complications of life while we fight to keep the complexities of being human for ourselves.

Would you like me to help you draft a specific “Manifesto for the Human Continuum” based on these points for your next post?

SB: At this point I would like you to flesh out in more detail your thoughts and suggestions regarding each of the bullet points.

GEMINI: This is a monumental task because we are not just talking about “tech updates”; we are talking about a fundamental shift in the human operating system.

Here is a deeper dive into each of your 14 points, exploring the civilizational “rupture” and the strategies we might use to maintain our humanity.

1. Human Occupations and Employment Rates

The Problem: Historically, technology replaced muscle (Industrial Revolution). AI replaces cognition. When “thinking” is commodified, the economic value of a human mind drops. This leads to a “meaning crisis”: if we aren’t what we do, who are we?

Civilizational Strategy: We must move from an Extractive Economy to a Presence Economy. We need to subsidize and elevate “high-touch” roles where the value is the human being present (e.g., therapists, specialized caregivers, traditional craftsmen). Labor laws should be updated to mandate “human-origin” requirements for specific service sectors.

2. Agriculture and Food Logistics

The Problem: AI-managed “monocultures” are incredibly efficient but biologically fragile. If a central AI optimizes the global food supply for profit or yield alone, it may ignore biodiversity or soil health, leading to a systemic collapse (a “digital Dust Bowl”).

Civilizational Strategy: Precision Localism. Use AI to help small-scale farmers understand their specific micro-climates and soil chemistry, allowing them to out-produce industrial monocultures without the same ecological risk.

3. Diplomacy, International Affairs, and Law

The Problem: AI moves at the speed of light; diplomacy moves at the speed of trust. If nations use AI to simulate “Game Theory” outcomes, they may find themselves in a “prisoner’s dilemma” where the algorithm decides that a pre-emptive strike or a trade war is the only “logical” move, bypassing human de-escalation.

Civilizational Strategy: Mandatory Latency. International protocols should require a “Human Review Period” for significant diplomatic shifts. We must preserve the “face-to-face” summit as the ultimate authority, acknowledging that human empathy is a necessary lubricant for peace.

4. War

The Problem: The “Flash War.” Autonomous weapons systems (AWS) could escalate a border skirmish into a full-scale conflict in milliseconds, before a human commander even realizes a shot was fired. This removes the “moral friction” of killing.

Civilizational Strategy: The “Human-in-the-Loop” Mandate. International law must define “meaningful human control” for any lethal action. We must also develop “AI Watchdogs” designed specifically to detect and halt algorithmic escalations by other AIs.

5. Health Care (Especially Diagnostics)

The Problem: The “Black Box” diagnosis. If an AI tells a patient they have cancer but cannot explain why in a way a human understands, we lose the “therapeutic alliance.” We risk becoming “biological machines” to be fixed by “digital mechanics.”

Civilizational Strategy: Augmented Medicine. AI should be the “lab assistant,” but the “Physician” must remain the “interpreter.” We must invest in “Explainable AI” (XAI) that can show its work to both the doctor and the patient.

6. Communications

The Problem: Semantic Saturation. When AI can generate infinite text, video, and audio, “noise” drowns out “signal.” We lose the ability to have a public square because we can no longer agree on what was actually said or by whom.

Civilizational Strategy: Proof of Personhood. We need decentralized digital identity systems (such as “self-sovereign identity”) that verify a human is behind a message without compromising their privacy.

7. Publishing

The Problem: The “Dead Internet Theory” becomes reality. If 90% of published books or articles are AI-generated based on SEO trends, the “Cultural Continuum” stops. We stop exploring new ideas and just recycle old ones in new “wrappers.”

Civilizational Strategy: Artisanal Publishing. Just as we have “Organic” labels for food, we need “Human-Authored” certifications. Libraries and bookstores should prioritize works that demonstrate a “Human Lineage”: research that required physical presence and lived experience.

8. News Delivery Structures

The Problem: The end of shared reality. AI can generate “bespoke news” for every individual, confirming their biases so perfectly that two neighbors might live in entirely different factual universes.

Civilizational Strategy: The Return of the “Local Desk.” We must reinvigorate local, physical newsrooms. AI cannot attend a city council meeting or smell the air at a protest. We must fund “boots-on-the-ground” journalism as a public utility.

9. Religious Organizations and Spiritual Pursuits

The Problem: The “Digital Idol.” People may start looking to AI for “objective” moral guidance, forgetting that AI has no soul, no suffering, and no mortality. It can mimic wisdom without having “earned” it.

Civilizational Strategy: The Theology of the Body. Religious groups should emphasize the physicality of faith: sacraments, communal singing, and shared silence. These are the things a screen cannot replicate.

10. The Family

The Problem: The “Path of Least Resistance.” Why deal with the messy emotions of a spouse or child when an AI companion is always agreeable and perfectly “tuned” to your needs? This leads to a total atrophy of human relational skills.

Civilizational Strategy: Analog Rituals. Families must consciously create “tech-free” sanctuaries. We need to frame “boredom” and “disagreement” as essential growth features, not bugs to be optimized away.

11. Visual, Performing, and Literary Arts

The Problem: The “Great Flattening.” AI produces “average” art because it works on probabilities. We risk losing the “edge,” the “weirdness,” and the “sublime” that only comes from a human trying to express the inexpressible.

Civilizational Strategy: Patronage of the Imperfect. We must shift our aesthetic values to prize the intentional mistake and the physical mark (the brushstroke, the crack in the voice) that AI doesn’t make.

12. Domestic Law

The Problem: Algorithmic Bias. If we use AI to predict recidivism or set bail, we bake the prejudices of the past into the laws of the future. We risk a “Pre-Crime” society where you are judged by what a machine thinks you might do.

Civilizational Strategy: The “Right to Human Judgment.” Every citizen should have a constitutional right to have their case decided by a human jury and a human judge, with AI prohibited from making final determinations on liberty.

13. Education

The Problem: The “Cognitive Crutch.” If students use AI to bypass the “struggle” of learning, they never build the neural pathways for critical thought. We produce a generation of “highly efficient” people who can’t think for themselves.

Civilizational Strategy: Socratic & Embodied Learning. Move away from standardized testing (which AI wins) and toward oral defense, physical labs, and field-work. Education should be about forming the person, not just filling the data-bank.

14. Population Distribution

The Problem: AI-enabled remote work could lead to “Gentrifiers of the Wilderness,” where the wealthy move to beautiful rural areas and drive out locals, or it could lead to “Digital Slums” where those without tech access are abandoned by the infrastructure.

Civilizational Strategy: The Rural Renaissance. Use AI to bring high-level medical and educational resources to rural areas to stabilize populations, rather than just extracting talent from them. We should aim for a “distributed” civilization that values the land, not just the “hub.”

The Synthesis

The “vital thing” we are losing, Joe, is The Weight of Life. AI makes everything “weightless”—easy, fast, and frictionless. But humans need weight to stay grounded.

If we are to evolve into a “new kind of chaos,” it should be a Generative Chaos—one where we use AI to manage the “boring” logistics of survival so that we can spend more time on the “messy” business of being human.